Note: this document is intended only for students of Shijiazhuang Tiedao University (STDU).

Source code: https://github.com/Davidham3/STSGCN

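I. Determine the runtime environment

According to the STSGCN documentation, the code runs in the Docker image mxnet_1.41_cu100, that is, MXNet 1.4.1 built for CUDA 10.0.

However, around May 2022 the publisher of the MXNet images on Docker removed all of the old images. Version 1.4.1 is no longer available, so an alternative is needed.

Note: the newer images ship with mxnet-cu100==1.9.1, which is incompatible with STSGCN and fails at runtime.

Alternative: the general-purpose deep learning image pytorch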

https://hub.docker.com/r/floydhub/pytorch/tags

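In my repeated tests, only the image tagged 1.4.0-gpu.cuda10cudnn7-py3.54 ships CUDA 10.0, which is the CUDA version that mxnet-cu100 is built for. The other tags ship a different CUDA version, so the matching mxnet-cu100 Python package cannot be installed and used in them.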
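To make the pairing concrete: MXNet's GPU packages carry the CUDA version in their name, so mxnet-cu100 is the CUDA 10.0 build. Below is a minimal sketch of that check, assuming the image exposes /usr/local/cuda/version.txt in the "CUDA Version X.Y.Z" format (the file inspected in section III). The helper names cuda_tag and matches_package are my own and are not part of MXNet.

```python
import os
import re

# Path inspected in section III of this guide; assumed to exist inside the image.
VERSION_FILE = "/usr/local/cuda/version.txt"


def cuda_tag(version_text):
    """Turn text such as 'CUDA Version 10.0.130' into a suffix such as 'cu100'."""
    match = re.search(r"CUDA Version (\d+)\.(\d+)", version_text)
    if match is None:
        raise ValueError("unrecognised CUDA version text: {!r}".format(version_text))
    return "cu{}{}".format(match.group(1), match.group(2))


def matches_package(version_text, package="mxnet-cu100"):
    """Return True when the package name ends with the CUDA suffix of the image."""
    return package.endswith("-" + cuda_tag(version_text))


if __name__ == "__main__":
    if os.path.isfile(VERSION_FILE):
        with open(VERSION_FILE) as handle:
            text = handle.read()
        print(cuda_tag(text), matches_package(text))
    else:
        print("{} not found; run this inside the image".format(VERSION_FILE))
```

Run it with python3 inside the image. Any suffix other than cu100 means mxnet-cu100 is the wrong package for that image.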

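II. Put the dataset files where the code expects them and upload everything to the server

1. Download the dataset files

As the documentation says, download them from https://pan.baidu.com/share/init?surl=ZPIiOM__r1TRlmY4YGlolw

2. Download the code (from GitHub)

3. Extract the code and the dataset on your own computer, then place the data folder in the root directory of the code

The resulting directory tree looks like this: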

C:.

│ .gitignore

│ load_params.py

│ main.py

│ pytest.ini

│ README.md

│ requirements.txt

│ utils.py

│

├─config

│ ├─PEMS03

│ │ individual_GLU_mask_emb.json

│ │ individual_GLU_nomask_emb.json

│ │ individual_GLU_nomask_noemb.json

│ │ individual_relu_nomask_noemb.json

│ │ sharing_relu_nomask_noemb.json

│ │

│ ├─PEMS04

│ │ individual_GLU.json

│ │ individual_GLU_mask_emb.json

│ │ individual_relu.json

│ │ sharing_GLU.json

│ │ sharing_relu.json

│ │

│ ├─PEMS07

│ │ individual_GLU_mask_emb.json

│ │

│ └─PEMS08

│ individual_GLU_mask_emb.json

│

├─data

│ ├─PEMS03

│ │ PEMS03.csv

│ │ PEMS03.npz

│ │ PEMS03.txt

│ │ PEMS03_data.csv

│ │

│ ├─PEMS04

│ │ PEMS04.csv

│ │ PEMS04.npz

│ │

│ ├─PEMS07

│ │ PEMS07.csv

│ │ PEMS07.npz

│ │

│ └─PEMS08

│ PEMS08.csv

│ PEMS08.npz

│

├─docker

│ Dockerfile

│

├─models

│ stsgcn.py

│ __init__.py

│

├─pai_jobs

│ ├─PEMS03

│ │ individual_GLU_mask_emb.pai.yaml

│ │ individual_GLU_nomask_emb.pai.yaml

│ │ individual_GLU_nomask_noemb.pai.yaml

│ │ individual_relu_nomask_noemb.pai.yaml

│ │ sharing_relu_nomask_noemb.pai.yaml

│ │

│ ├─PEMS04

│ │ individual_GLU_mask_emb.pai.yaml

│ │

│ ├─PEMS07

│ │ individual_GLU_mask_emb.pai.yaml

│ │

│ └─PEMS08

│ individual_GLU_mask_emb.pai.yaml

│

├─paper

│ AAAI2020-STSGCN.pdf

│ STSGCN_poster.pdf

│

└─test

test_stsgcn.py

Note: there must be no extra folder level under data. It should directly contain the dataset folders whose names start with PEMS!

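4. Re-archive the folder (now containing data) and upload it to the server

5. Extract it on the server. Once again, make sure the extraction does not leave you with extra nested folders!!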
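Since a wrongly nested data folder is the easiest mistake to make here, a quick check after extracting can save a failed job. This is only a sketch: the file list is copied from the tree above (a subset, limited to the adjacency and signal files), and missing_files is a hypothetical helper of mine, not part of STSGCN.

```python
import os

# Expected files under <code root>/data, copied from the directory tree above.
EXPECTED = {
    "PEMS03": ["PEMS03.csv", "PEMS03.npz", "PEMS03.txt"],
    "PEMS04": ["PEMS04.csv", "PEMS04.npz"],
    "PEMS07": ["PEMS07.csv", "PEMS07.npz"],
    "PEMS08": ["PEMS08.csv", "PEMS08.npz"],
}


def missing_files(code_root):
    """Return the expected dataset files that are absent, relative to code_root."""
    missing = []
    for dataset, names in sorted(EXPECTED.items()):
        for name in names:
            path = os.path.join(code_root, "data", dataset, name)
            if not os.path.isfile(path):
                missing.append(os.path.relpath(path, code_root))
    return missing


if __name__ == "__main__":
    # "." assumes the script is started from the code root (where main.py lives).
    problems = missing_files(".")
    if problems:
        print("missing:", ", ".join(problems))
    else:
        print("data layout looks right")
```

If every file is reported missing, the usual cause is an extra level such as data/data/PEMS03 or STSGCN/STSGCN/data.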

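III. Pull the image on the HPC cluster and install the required Python packages

1. In a folder you created for storing images, run the command that builds the image (skip this step if you already have the image):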

singularity pull docker://floydhub/pytorch:1.4.0-gpu.cuda10cudnn7-py3.54

2. Enter the image and confirm that the CUDA version is 10.0.xxx

singularity shell pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif

cat /usr/local/cuda/version.txt

-bash-4.2$ singularity shell pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif

Singularity pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif:~/mxnet> cat /usr/local/cuda/version.txt

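CUDA Version 10.0.130

3. Install the Python packages the code requires

The required Python packages are listed in the code's requirements.txt

Do not use the one-shot install that lets pip read the requirements file by itself, because that way you cannot specify the version!!!

1) First, list the packages that come with the image

pip list

The required mxnet-cu100 package is not among them. It has to be installed at a specific version: 1.4.1, as mentioned above

2) Install mxnet-cu100==1.4.1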

pip install mxnet-cu100==1.4.1

If the download is too slow, switch to a mirror in mainland China

pip install mxnet-cu100==1.4.1 -i https://pypi.tuna.tsinghua.edu.cn/simple/

If you get a permission error or something similar, add the --user option

pip install --user mxnet-cu100==1.4.1 -i https://pypi.tuna.tsinghua.edu.cn/simple/

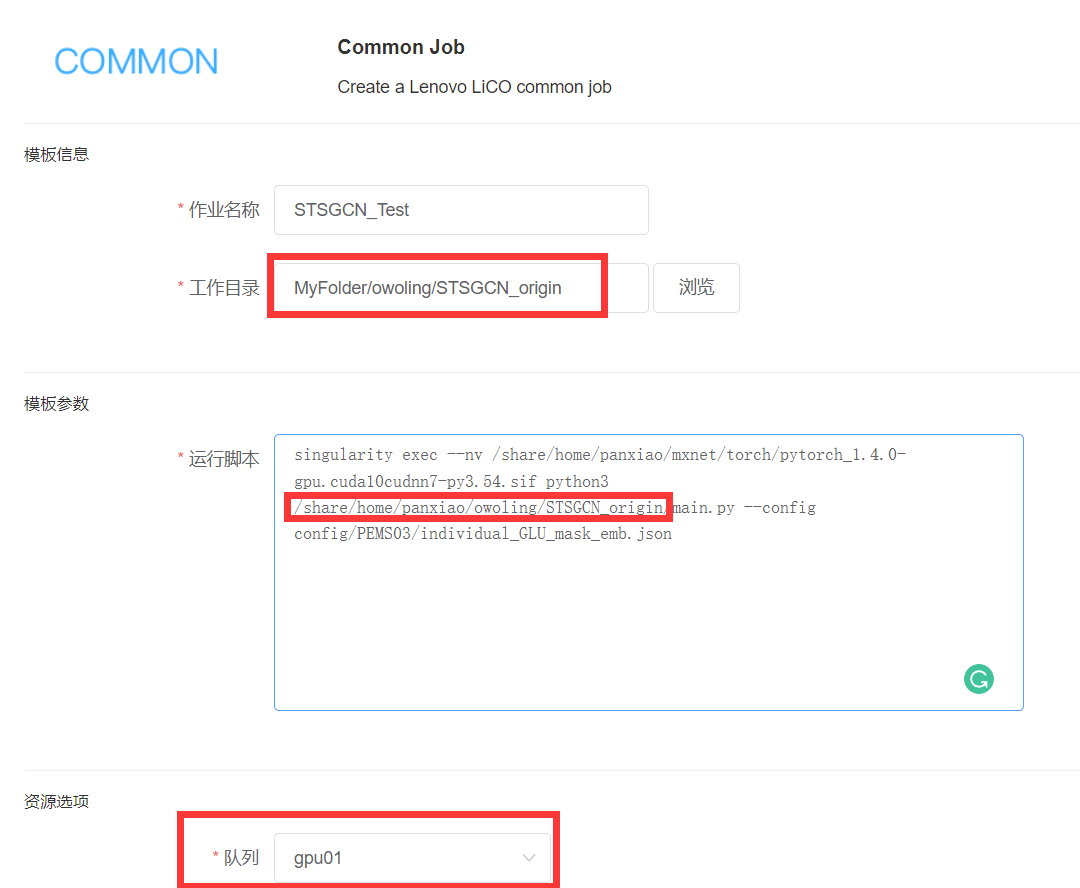
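Before submitting a long job, it may be worth confirming that the install really took effect. The sketch below is an assumption-laden smoke test rather than anything from the STSGCN repository: it compares mx.__version__ with 1.4.1 and tries to allocate a one-element array on GPU 0. The function names are mine. It is written without f-strings so that it also runs on the Python 3.5 inside the image.

```python
REQUIRED_VERSION = "1.4.1"  # the version this guide pins


def version_matches(installed, required=REQUIRED_VERSION):
    """Return True when the installed version string is exactly the required one."""
    return installed.strip() == required


def describe_install():
    """Report whether mxnet is importable, at the right version, and can use GPU 0."""
    try:
        import mxnet as mx
    except ImportError:
        return "mxnet is not installed in this environment"
    if not version_matches(mx.__version__):
        return "mxnet {} found, but {} is required".format(
            mx.__version__, REQUIRED_VERSION)
    try:
        # Allocating a tiny array forces MXNet to actually touch the GPU.
        mx.nd.ones((1,), ctx=mx.gpu(0)).asnumpy()
    except mx.base.MXNetError as err:
        return "mxnet {} is installed, but GPU 0 is not usable: {}".format(
            mx.__version__, err)
    return "mxnet {} is installed and GPU 0 is usable".format(mx.__version__)


if __name__ == "__main__":
    print(describe_install())
```

The GPU part only makes sense on a node that has a GPU and when the image is started with singularity exec --nv, as in the run command in section IV. On a login node, expect the "not usable" message even if the install is fine.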

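IV. Create a job in the web console and try a first run

On the job submission page, select Common Job

1. Job name: don't type something random. Use a name related to the project you are running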

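2. Working directory: this is where the output is written. Set it to the code path, because the run script below passes the config file as a relative path (config/PEMS03/...)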

3. Run script: mine is shown below. Substitute your own directory paths as appropriate, and note that the interpreter must be python3

singularity exec --nv /share/home/panxiao/mxnet/torch/pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif python3 /share/home/panxiao/owoling/STSGCN_origin/main.py --config config/PEMS03/individual_GLU_mask_emb.json

4. Queue: choose GPU01 or GPU02

STSGCN trains on the GPU, so you need a queue that can use GPUs

5. Submit
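The web console turns these fields into an LSF batch script. The job report in section V echoes the one it generated for me. If you want to see how the fields fit together, here is a rough sketch that rebuilds a script of the same shape. build_job_script is a hypothetical helper of mine, the /path/to/... values are placeholders, and the #BSUB lines simply mirror the ones in that report, so your cluster may need different ones.

```python
import shlex


def build_job_script(job_name, queue, code_dir, image, config):
    """Render an LSF script shaped like the one echoed in the job report."""
    command = "singularity exec --nv {image} python3 {main} --config {config}".format(
        image=shlex.quote(image),
        main=shlex.quote(code_dir.rstrip("/") + "/main.py"),
        config=shlex.quote(config),
    )
    lines = [
        "#!/bin/bash",
        "#BSUB -J " + job_name,
        "#BSUB -q " + queue,
        # The working directory is the code root, so relative paths resolve.
        "#BSUB -cwd " + code_dir,
        "#BSUB -o {}.out".format(job_name),
        "#BSUB -e {}.out".format(job_name),
        "#BSUB -n 1",
        "#BSUB -R span[ptile=1]",
        "echo job start time is `date`",
        command,
        "echo job end time is `date`",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder paths; replace them with your own image and code locations.
    print(build_job_script(
        job_name="STSGCN_test",
        queue="gpu01",
        code_dir="/path/to/STSGCN",
        image="/path/to/pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif",
        config="config/PEMS03/individual_GLU_mask_emb.json",
    ))
```

Note that main.py and the image use absolute paths while the config stays relative. That only works because -cwd points at the code root.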

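V. View the output

In the job list, click a running job to watch its output as it is produced. Each epoch prints one round of output

After the run has finished, the complete output is available under Completed

Part of the output is shown below: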

training: Epoch: 195, RMSE: 22.26, MAE: 13.88, time: 114.98 s

validation: Epoch: 195, loss: 15.91, time: 125.93 s

training: Epoch: 196, RMSE: 22.25, MAE: 13.88, time: 114.94 s

validation: Epoch: 196, loss: 15.91, time: 125.87 s

training: Epoch: 197, RMSE: 22.25, MAE: 13.88, time: 114.97 s

validation: Epoch: 197, loss: 15.91, time: 125.93 s

training: Epoch: 198, RMSE: 22.25, MAE: 13.88, time: 115.56 s

validation: Epoch: 198, loss: 15.91, time: 126.57 s

training: Epoch: 199, RMSE: 22.25, MAE: 13.88, time: 115.81 s

validation: Epoch: 199, loss: 15.91, time: 126.81 s

training: Epoch: 200, RMSE: 22.25, MAE: 13.88, time: 115.69 s

validation: Epoch: 200, loss: 15.91, time: 126.70 s

step: 139

training loss: 14.08

validation loss: 15.88

tesing: MAE: 17.28

testing: MAPE: 16.44

testing: RMSE: 29.21

[139, 14.080880844908673, 15.879517380553997, 17.275275403677266, 16.440598834937877, 29.20561195447598, [(13.49049721362016, 13.612122893400919, 21.630752690768713), (14.0600615112543, 14.003408920252877, 22.862788056168448), (14.513224131586234, 14.340502055925604, 23.7905257877543), (14.895920078789274, 14.61337253607374, 24.599208340866646), (15.23287733045022, 14.879751671327798, 25.285979740801185), (15.5545385908317, 15.121839666269299, 25.934192028006414), (15.8663616815247, 15.365095202719997, 26.54343430198142), (16.1678652298019, 15.582826985578095, 27.133346725126206), (16.453069354125805, 15.788026783503634, 27.683458861671554), (16.728988342405152, 15.997320420994887, 28.204877842562553), (16.999029055522374, 16.214119541882983, 28.70540361835054), (17.275275403677266, 16.440598834937877, 29.20561195447598)]]

job end time is Tue Aug 2 05:51:33 CST 2022

------------------------------------------------------------

Sender: LSF System <lsfadmin@c29>

Subject: Job 29289: <stsgcn_140torch> in cluster <cluster1> Done

Job <stsgcn_140torch> was submitted from host <m01> by user <panxiao> in cluster <cluster1> at Mon Aug 1 17:42:04 2022

Job was executed on host(s) <c29>, in queue <gpu01>, as user <panxiao> in cluster <cluster1> at Mon Aug 1 17:42:05 2022

</share/home/panxiao> was used as the home directory.

</share/home/panxiao/owoling/stsgcn_origin> was used as the working directory.

Started at Mon Aug 1 17:42:05 2022

Terminated at Tue Aug 2 05:51:33 2022

Results reported at Tue Aug 2 05:51:33 2022

Your job looked like:

------------------------------------------------------------

# LSBATCH: User input

#!/bin/bash

#BSUB -J STSGCN_140torch

#BSUB -q gpu01

#BSUB -cwd /share/home/panxiao/owoling/STSGCN_origin

#BSUB -o STSGCN_140torch-1659270455033.out

#BSUB -e STSGCN_140torch-1659270455033.out

#BSUB -n 1

#BSUB -R span[ptile=1]

echo job start time is `date`

echo `hostname`

singularity exec --nv /share/home/panxiao/mxnet/torch/pytorch_1.4.0-gpu.cuda10cudnn7-py3.54.sif python3 /share/home/panxiao/owoling/STSGCN_origin/main.py --config config/PEMS03/individual_GLU_mask_emb.json

echo job end time is `date`

------------------------------------------------------------

Successfully completed.

Resource usage summary:

CPU time : 47020.08 sec.

Max Memory : 4239 MB

Average Memory : 3585.81 MB

Total Requested Memory : -

Delta Memory : -

Max Swap : -

Max Processes : 5

Max Threads : 113

Run time : 43768 sec.

Turnaround time : 43769 sec.

The output (if any) is above this job summary.

Everything printed below the line "job end time is Tue Aug 2 05:51:33 CST 2022" is unrelated to the code. It is a run report appended by the LSF scheduler.
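If you want the numbers rather than the raw text, the per-epoch lines and the final bracketed list can be parsed with a few lines of Python. This is a sketch under one assumption I have not checked against main.py: the list is laid out as [step, training loss, validation loss, MAE, MAPE, RMSE, per-horizon (MAE, MAPE, RMSE) tuples]. I inferred that layout from how its values line up with the labelled lines printed just before it. The function names are mine.

```python
import ast
import re

# Matches lines such as:
# training: Epoch: 195, RMSE: 22.26, MAE: 13.88, time: 114.98 s
EPOCH_RE = re.compile(
    r"training: Epoch: (\d+), RMSE: ([\d.]+), MAE: ([\d.]+), time: ([\d.]+) s")


def parse_training_lines(text):
    """Return a list of (epoch, rmse, mae) tuples from the 'training:' lines."""
    return [(int(epoch), float(rmse), float(mae))
            for epoch, rmse, mae, _seconds in EPOCH_RE.findall(text)]


def parse_summary(line):
    """Split the final bracketed list into named fields (layout is inferred)."""
    step, train_loss, val_loss, mae, mape, rmse, per_horizon = ast.literal_eval(line)
    return {
        "step": step,
        "training_loss": train_loss,
        "validation_loss": val_loss,
        "test_mae": mae,
        "test_mape": mape,
        "test_rmse": rmse,
        "per_horizon": per_horizon,  # list of (MAE, MAPE, RMSE) tuples
    }


if __name__ == "__main__":
    sample = "training: Epoch: 200, RMSE: 22.25, MAE: 13.88, time: 115.69 s"
    print(parse_training_lines(sample))
```

In the run above the last per-horizon tuple equals the overall test MAE, MAPE and RMSE, which is what led me to read the tuples as per-horizon results.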

VI. Some errors and how to fix them

1. Out of GPU memory

The error looks like this:

The output (if any) is above this job summary.

job start time is Mon Aug 1 00:01:54 CST 2022

c27

{

"act_type": "GLU",

"adj_filename": "data/PEMS03/PEMS03.csv",

"batch_size": 32,

"ctx": 0,

"epochs": 200,

"filters": [

[

64,

64,

64

],

[

64,

64,

64

],

[

64,

64,

64

],

[

64,

64,

64

]

],

"first_layer_embedding_size": 64,

"graph_signal_matrix_filename": "data/PEMS03/PEMS03.npz",

"id_filename": "data/PEMS03/PEMS03.txt",

"learning_rate": 0.001,

"max_update_factor": 1,

"module_type": "individual",

"num_for_predict": 12,

"num_of_features": 1,

"num_of_vertices": 358,

"optimizer": "adam",

"points_per_hour": 12,

"spatial_emb": true,

"temporal_emb": true,

"use_mask": true

}

The shape of localized adjacency matrix: (1074, 1074)

(15701, 12, 358, 1) (15701, 12, 358)

(5219, 12, 358, 1) (5219, 12, 358)

(5219, 12, 358, 1) (5219, 12, 358)

Traceback (most recent call last):

File "/share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/symbol/symbol.py", line 1523, in simple_bind

ctypes.byref(exe_handle)))

File "/share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/base.py", line 252, in check_call

raise MXNetError(py_str(_LIB.MXGetLastError()))

mxnet.base.MXNetError: [00:02:14] src/storage/./pooled_storage_manager.h:151: cudaMalloc failed: out of memory

Stack trace returned 10 entries:

[bt] (0) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x3f935a) [0x2b23e946635a]

[bt] (1) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x3f9981) [0x2b23e9466981]

[bt] (2) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x350f15b) [0x2b23ec57c15b]

[bt] (3) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x35138cf) [0x2b23ec5808cf]

[bt] (4) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(+0x4306f9) [0x2b23e949d6f9]

[bt] (5) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(mxnet::NDArray::NDArray(nnvm::TShape const&, mxnet::Context, bool, int)+0x7fa) [0x2b23e949e08a]

[bt] (6) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(void std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >::_M_emplace_back_aux<nnvm::TShape const&, mxnet::Context const&, bool, int const&>(nnvm::TShape const&, mxnet::Context const&, bool&&, int const&)+0xda) [0x2b23ebe571ca]

[bt] (7) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(mxnet::common::EmplaceBackZeros(mxnet::NDArrayStorageType, nnvm::TShape const&, mxnet::Context const&, int, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*)+0x365) [0x2b23ebe57855]

[bt] (8) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(mxnet::exec::GraphExecutor::InitArguments(nnvm::IndexedGraph const&, std::vector<nnvm::TShape, std::allocator<nnvm::TShape> > const&, std::vector<int, std::allocator<int> > const&, std::vector<int, std::allocator<int> > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::vector<mxnet::OpReqType, std::allocator<mxnet::OpReqType> > const&, std::unordered_set<std::string, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::string> > const&, mxnet::Executor const*, std::unordered_map<std::string, mxnet::NDArray, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, mxnet::NDArray> > >*, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*)+0xcbc) [0x2b23ebe58c7c]

[bt] (9) /share/home/panxiao/.local/lib/python3.6/site-packages/mxnet/libmxnet.so(mxnet::exec::GraphExecutor::Init(nnvm::Symbol, mxnet::Context const&, std::map<std::string, mxnet::Context, std::less<std::string>, std::allocator<std::pair<std::string const, mxnet::Context> > > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::vector<mxnet::Context, std::allocator<mxnet::Context> > const&, std::unordered_map<std::string, nnvm::TShape, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, nnvm::TShape> > > const&, std::unordered_map<std::string, int, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, int> > > const&, std::unordered_map<std::string, int, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, int> > > const&, std::vector<mxnet::OpReqType, std::allocator<mxnet::OpReqType> > const&, std::unordered_set<std::string, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::string> > const&, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*, std::vector<mxnet::NDArray, std::allocator<mxnet::NDArray> >*, std::unordered_map<std::string, mxnet::NDArray, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, mxnet::NDArray> > >*, mxnet::Executor*, std::unordered_map<nnvm::NodeEntry, mxnet::NDArray, nnvm::NodeEntryHash, nnvm::NodeEntryEqual, std::allocator<std::pair<nnvm::NodeEntry const, mxnet::NDArray> > > const&)+0x76d) [0x2b23ebe66f5d]

You can ignore all of that clutter. The line that matters is this one:

src/storage/./pooled_storage_manager.h:151: cudaMalloc failed: out of memory

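This message means the cluster's GPU memory is fully occupied. The only option is to wait until an administrator frees resources or other people's jobs finish.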
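If you can get a shell on a GPU node, nvidia-smi can tell you how much memory is already taken before you resubmit. The sketch below parses the output of nvidia-smi --query-gpu=index,memory.used,memory.total --format=csv,noheader,nounits, which prints one line per GPU with values in MiB. Whether your cluster lets you run it on the compute nodes is an assumption, and the helper names are mine.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse lines like '0, 15000, 16160' into (index, used_mib, total_mib)."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = [int(field) for field in line.split(",")]
        rows.append((index, used, total))
    return rows


def free_memory_mib(rows):
    """Map each GPU index to its free memory in MiB."""
    return {index: total - used for index, used, total in rows}


if __name__ == "__main__":
    try:
        output = subprocess.check_output(QUERY).decode()
        free = free_memory_mib(parse_gpu_memory(output))
    except (OSError, subprocess.CalledProcessError, ValueError) as err:
        print("could not query GPUs:", err)
    else:
        for index, mib in sorted(free.items()):
            print("GPU {}: {} MiB free".format(index, mib))
```

A GPU showing only a few hundred MiB free is already occupied by another job, which matches the cudaMalloc failure above.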

2. MXNet version mismatch

The error looks like this:

Traceback (most recent call last):

File "/share/home/panxiao/owoling/STSGCN_origin/main.py", line 27, in <module>

net = construct_model(config)

File "/share/home/panxiao/owoling/STSGCN_origin/utils.py", line 37, in construct_model

init=mx.init.Constant(value=adj_mx.tolist()))

File "/share/home/panxiao/.local/lib/python3.5/site-packages/mxnet/symbol/symbol.py", line 2804, in var

init = init.dumps()

File "/share/home/panxiao/.local/lib/python3.5/site-packages/mxnet/initializer.py", line 478, in dumps

self._kwargs['value'] = val.tolist() if isinstance(val, np.ndarray) else val.asnumpy().tolist()

AttributeError: 'list' object has no attribute 'asnumpy'

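job end time is Wed Jul 27 19:13:44 CST 2022

The error message does not make the cause obvious, but the cause is simply that the installed MXNet version does not match the required one. I only reached this conclusion after countless attempts (a lesson learned the hard way)

Installing mxnet-cu100==1.4.1 fixes it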
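Reading the traceback more closely does give a hint. utils.py passes adj_mx.tolist(), a plain Python list, to mx.init.Constant. The dumps line quoted from the installed initializer.py only handles a NumPy array (via .tolist()) or something that has .asnumpy(). A list is neither, hence the AttributeError. The sketch below reproduces just that branch with stand-ins, so it runs without MXNet. ArrayLike and dumps_value are hypothetical names of mine, and I make no claim about how 1.4.1 implements the same method.

```python
class ArrayLike(object):
    """Hypothetical stand-in for numpy.ndarray: has .tolist() but no .asnumpy()."""

    def __init__(self, rows):
        self.rows = rows

    def tolist(self):
        return [list(row) for row in self.rows]


def dumps_value(val):
    """Mirror the branch quoted in the traceback, with ArrayLike in place of np.ndarray."""
    return val.tolist() if isinstance(val, ArrayLike) else val.asnumpy().tolist()


adj_mx = ArrayLike([[1, 0], [0, 1]])

# An array-like value takes the .tolist() branch and works.
ok = dumps_value(adj_mx)

# A plain list (what adj_mx.tolist() produces) falls through to .asnumpy() and fails.
try:
    dumps_value(adj_mx.tolist())
    message = None
except AttributeError as err:
    message = str(err)

print(ok)
print(message)
```

So the installed MXNet and the STSGCN code disagree about what type Constant accepts, which is why pinning the version the code was written against makes the error go away.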

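If you run into other problems, feel free to ask!

Hello, could you tell me what the specific steps are?

Hello, I have a question:

30-series cards don't support CUDA 10, so does that mean this can't run on them?

@yucio Oh? If CUDA 10 isn't supported, then it probably can't run. This program is old enough that a lot of its code is deprecated. It won't run on the newer version of anything, so you are locked in tight. It feels like a real pit!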